Over the past decade, Artificial Intelligence (AI) has swiftly advanced from a relatively niche research area into a transformative force reshaping industries worldwide. According to the US International Trade Administration, the UK AI market was valued at over $21 billion last year and is projected to reach $1 trillion by 2035. Analysis by Sopra Steria Next, based on available research, predicts that global AI spending will reach $1.27 trillion in 2028.

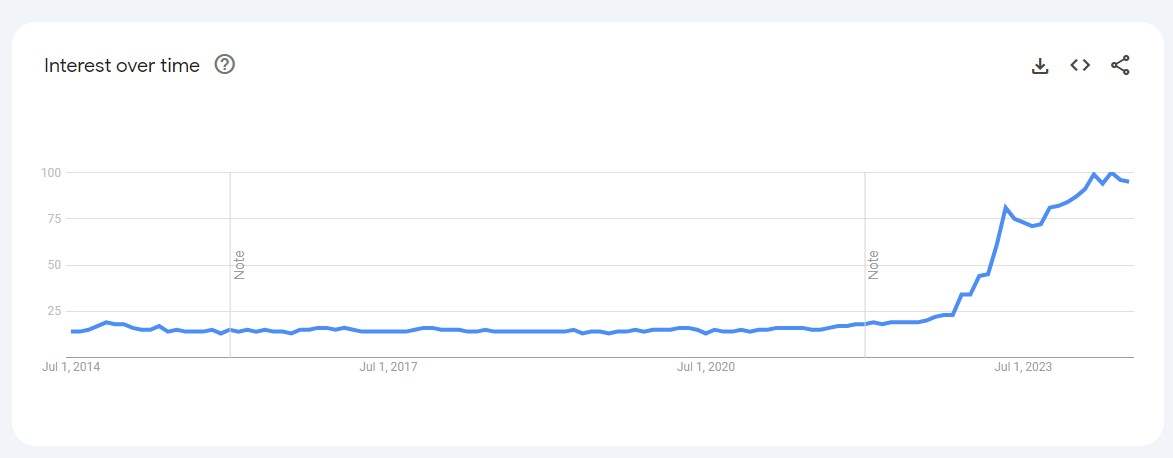

Today, this growth is plain to see. It’s almost impossible to browse LinkedIn without encountering a plethora of opinion pieces on the subject. (Here’s to one more!) That noise is backed up by Google Trends, which shows “Interest over time” for the search term “AI” rising from 14 in July 2014 to 98 in July 2024.

As organisations strive to remain competitive and drive efficiencies, the question is no longer whether to adopt AI, but how to best prepare for and leverage it effectively. To do this, it’s essential to first understand the current state of AI, then its potential applications, what an organisation must get right to adopt it safely, and how to industrialise the process of iteratively delivering value-led use cases.

Understanding the current state of AI

First, what do we mean by AI? For the purposes of this piece, we will refer to the definition adopted by the European Parliament, which states:

‘AI system’ means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

Currently, when most people think about AI they’re usually thinking about Generative AI, a broad category of AI systems that generate new content including text, images, music, videos and more. More specifically, and in the case of juggernauts such as ChatGPT, BERT and Claude, they are thinking of Large Language Models (LLMs), a specialised type of AI model and a subset of Generative AI. LLMs make use of Natural Language Processing (NLP) to generate new, human-like text in response to the prompts provided to them.

To help make sense of AI and the vast array of systems in existence today, these systems are usually categorised in two primary ways: by their capability (what the system can do) and by their functionality (how the system achieves its purpose), known as Type 1 and Type 2 respectively. Because the two classifications are independent of one another, any given AI system or technology will appear in more than one category.

No AI system that exists today has managed to surpass Artificial Narrow Intelligence (ANI), where AI systems are designed for specific tasks. As such, the Generative AI tooling of today is not able to apply human-like critical thinking and, going further, is prone to errors – for example, citing non-existent sources. While advancements continue, with breakthroughs almost weekly, true Artificial General Intelligence (AGI) and beyond remain future milestones and the target of research institutes, big tech players, and governments.

Organisations must therefore focus on optimising their use of current AI technologies such as Machine Learning (ML), NLP and Deep Learning, to name but a few, while positioning themselves to take advantage of future developments as they are released. Such developments are almost certain to materialise if the likes of Raymond Kurzweil (American computer scientist and author of ‘The Singularity Is Near’ and ‘The Singularity Is Nearer’), Sam Altman (CEO of OpenAI), and Shane Legg (cofounder of DeepMind Technologies, now Google DeepMind) are to be believed.

If this all seems overwhelming, we’re here to help!

Preparing for AI: the six pillars of readiness

How then do organisations prepare themselves?

At Sopra Steria Next, our AI Readiness Assessment evaluates an organisation's preparedness across six critical pillars: Strategy, Governance & Ethics, Infrastructure, Data, Expertise, and Culture.

1. Strategy: setting the vision

For any organisation looking to leverage AI, the first step is to develop a clear, comprehensive, and cohesive strategy. To do this, the wider organisation must be taken on the journey and inspired: assured of the benefits to them, their business units or departments, and their roles, and assured of an ethical approach that emphasises transparency and fairness and promotes trust. This involves understanding the specific ways AI can enhance an organisation’s business processes, support employees in their day-to-day tasks, improve decision-making, and create value at every level of the organisation.

Crucially, a well-defined AI strategy must align with the overall business goals and should start with a co-designed vision, one which sets out how an organisation intends to leverage AI to deliver value. As such, the wider organisational strategy, its short- and long-term goals, and its challenges must be reviewed and understood before committing pen to paper, or fingers to keyboards. From this understanding, a series of measurable AI objectives should be defined and a roadmap for AI adoption produced – one that details measurable short- and long-term objectives, resource allocation, and a prioritised list of high-value use cases, never losing sight of those organisational goals and values. Finally, this strategy should account for any potential risks and challenges, ensuring mitigation plans are in place.

Through implementing a solid AI strategy, organisations are best equipped to navigate the complexities of AI adoption and positioned to maximise the benefits it affords.

2. Compliance, Governance & Ethics: ensuring responsible AI

With the power of AI comes the responsibility to use it ethically and transparently. Organisations must establish robust governance frameworks that address issues such as bias, fairness, accountability, and compliance with regulatory standards.

The European Union (EU) AI Act, “the world’s first comprehensive AI law”, establishes a risk-based approach to AI systems. Those AI systems deemed to pose an ‘unacceptable risk’ will be banned six months after the Act enters into force. The Act was approved by the European Parliament in March 2024 and entered into force on 1 August 2024, meaning those prohibitions apply from 2 February 2025.

Following Brexit, it would be reasonable to assume that the EU AI Act does not apply to organisations operating out of the UK; however, this is not the case. The Act is extra-territorial, meaning that it applies to organisations that supply AI systems into the EU as well as to foreign systems whose output enters the EU market. UK-specific regulation is also on the horizon: in the King’s Speech delivered on 17 July 2024, Keir Starmer’s new Labour government stated that “it will seek to establish the appropriate legislation to place requirements on those working to develop the most powerful artificial intelligence models”.

A UK colleague of mine, Nicholas Wild, Head of Digital Ethics, went into further detail on this topic in his recently published blog ‘How to use AI for an Ethical and Sustainable Future’, speaking to the importance not only of ethical and sustainable AI but also of AI that works towards ethical, sustainable, and equitable outcomes.

As a result, it is crucial that any readiness assessment takes existing and forthcoming legislation into account, reviews current AI systems and develops future AI systems in a manner that is ethical by design, upholding ethical principles and legal requirements, and fostering trust among stakeholders.

3. Technology & Infrastructure: building the foundation

To adopt AI successfully and effectively, organisations require a solid technological foundation. This means investing in the right infrastructure to support additional, and potentially larger, AI workloads, including cloud computing resources, data storage solutions, and high-performance computing capabilities. All of these come with substantial energy requirements that should be managed as part of an AI strategy. Indeed, any potential increase in workloads and change to ways of working introduces and multiplies concerns about existing IT systems. Can they handle these increased demands? Will they scale where needed? How reliable will they be? Is the right security in place to keep out malicious actors and to prevent the wrong information (sensitive, commercial, intellectual property, etc.) leaving the estate? What additional impact would this have on the environment, will it have a positive impact overall, and how can we mitigate or offset that? This last question is something Yves Nicolas, Sopra Steria Deputy Group CTO & AI Programme Director, talks about in his blog ‘GenAI – and what if it was mostly a question of impact?’.

In addition, any AI strategy must cohere with the broader technology strategy (assuming one exists) and should be assessed to ensure compatibility and interoperability with the existing systems and applications on the estate. Such alignment helps to minimise disruption and maximise the efficiency of AI systems implemented in future. A modern, flexible infrastructure should therefore enable organisations to experiment with new and different AI tooling and platforms, scaling resources as needed, without introducing undue risk to the organisation. Moreover, it affords organisations the opportunity to implement the robust security measures that are essential to protecting sensitive data and AI systems from potential threats.

4. Data: fuelling AI with quality data

Data is the lifeblood of AI. To harness the full potential of AI, that data needs to be clean, well-managed, trusted, and as free of bias as possible. Organisations must prioritise data management and quality, and implement a robust data governance framework, which is critical to maintaining data integrity, privacy, and security. Moreover, consolidating data from diverse sources (something I touched on in a blog post last year titled 'Empowering data-driven insights in highly secure environments'), ensuring it represents different demographics and perspectives, and implementing relevant data pipelines all help to ensure data is clean, fair, accurate, and accessible to the right, authorised people.

I’m sure most people reading this piece have tried to produce data visualisations or analytics using dirty data from multiple sources and wear the scars of being forced to start from scratch. Why would AI be any different?

As I highlighted in my previous piece on the Common Data Model (CDM), effective data management can significantly enhance self-service analytics, enabling faster decision-making and operational efficiency within secure environments.

Ensuring data quality and accessibility across the organisation allows for more accurate AI models and insights, driving better outcomes.
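To make the idea of clean, fair data a little more concrete, here is a minimal Python sketch of the kind of check a data pipeline might run before data reaches an AI model. It is an illustration under stated assumptions rather than part of our assessment: the function name `profile_records`, the field names, and the sample records are all hypothetical, and a real pipeline would use whatever checks the organisation's data governance framework calls for.

```python
from collections import Counter


def profile_records(records, required_fields, group_field):
    """Return simple quality indicators for a list of dict records.

    Hypothetical helper for illustration only: it reports missing values,
    exact duplicates, and how records are spread across one grouping field.
    """
    total = len(records)
    if total == 0:
        raise ValueError("no records to profile")

    # Share of records where each required field is absent or empty.
    missing = {
        field: sum(1 for r in records if r.get(field) in (None, "")) / total
        for field in required_fields
    }

    # Count records that exactly repeat an earlier record on the required fields.
    seen = Counter(tuple(r.get(f) for f in required_fields) for r in records)
    duplicates = sum(count - 1 for count in seen.values())

    # Share of records per group, to help spot under-represented groups.
    groups = Counter(r.get(group_field) for r in records)
    representation = {group: n / total for group, n in groups.items()}

    return {
        "missing": missing,
        "duplicates": duplicates,
        "representation": representation,
    }


# Made-up sample data: one duplicated row and two empty age bands.
sample = [
    {"id": 1, "region": "north", "age_band": "18-34"},
    {"id": 2, "region": "north", "age_band": ""},
    {"id": 2, "region": "north", "age_band": ""},
    {"id": 3, "region": "south", "age_band": "35-54"},
]
report = profile_records(sample, ["id", "region", "age_band"], "region")
print(report)
```

Even a simple report like this gives a team something measurable to track over time: the share of missing values, the number of duplicates, and whether any group is thinly represented in the data.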

5. Expertise: cultivating the right talent

Much like a ship, you need the right people to make the thing sail – engineers to keep the engines running, captains to set the course, deck hands to clean the deck. Organisations need to invest in the right people and expertise to realise a successful adoption.

A skilled and diverse workforce is needed that can develop, implement and manage AI systems and technologies, ranging from Data Engineers to build data pipelines to User Researchers who can help uncover potential use cases, and everything in between.

A diverse team that is representative of those who use and are impacted by these systems, and that brings varied perspectives, is essential in mitigating the biases that can arise from more homogeneous groups. Therefore, organisations must continue to nurture existing talent through training and upskilling, as well as hiring new people with specialised AI skills. These specialised skills afford organisations more control over the AI systems they choose to adopt.

Organisations must foster a culture of continuous learning and innovation (spoilers intended), promote collaboration with external experts and academic institutions to glean valuable insights that accelerate maturity, and identify AI champions who can lead initiatives and advocate for AI adoption.

I’m a firm believer that people, and the skills, knowledge and experience they bring, are every organisation’s most valuable asset. Without them, the ship would sink.

6. Culture: fostering an AI-ready mindset

Finally, organisational culture plays a pivotal role when looking to adopt any new technology, and even more so in the case of AI. If people feel that AI will impact their livelihood in any way, they will be unlikely to help sail the metaphorical ship.

Therefore, adopting AI requires people to embrace AI. For people to embrace AI, there must be a culture of transparency. To nurture this, organisational leadership must be honest about their intentions, clear in their values and goals, and actively champion AI initiatives – recognising and celebrating AI-driven success stories. In doing so, they act as catalysts, building support and accelerating momentum for ongoing and future AI initiatives across the organisation.

However, nurturing transparency alone is not enough. Organisations must create an environment where employees feel secure in their positions and empowered to experiment with AI and propose new ideas and use cases. By sharing and learning from successes and failures, both their own and those of others, organisations can foster a culture of innovation and accelerate their AI maturity.

Industrialising AI use cases

According to the Office for National Statistics (ONS) Business Insights and Conditions Survey (BICS) conducted in 2023, “approximately one in six businesses (16%) are currently implementing at least one of the AI applications asked about in the survey.” That same survey explored planned future use and found that 13% of businesses specified at least one planned use, 15% were unsure about future use, and 74% responded that the question was not applicable to their business.

These figures are small, and it is worth noting that businesses with fewer than 10 employees were surveyed and that the questions asked may not have been as encompassing as needed, both of which may be affecting these results – something acknowledged by the ONS in their write-up.

So, why bring this all up?

Well, the theoretical decrease in adoption points towards an interesting conundrum – once you have implemented some form of AI (which may or may not be realising value), how do you continue to find new use cases, make a successful business case for them, and iteratively deliver those AI use cases?

Through an AI factory

The concept of a digital ‘factory’ isn’t a new one – organisations have been setting them up under different guises for decades. A digital ‘garage’ is my personal favourite, evoking Silicon Valley-esque imagery of isolated innovators forcing through change at pace. Joking aside, common themes across all models involve a value-led approach, where ideas and use cases are rapidly investigated, prototyped and piloted, with go/no-go decision points that enable teams to fail fast where needed.

At Sopra Steria Next, our AI Factory model enshrines a value-oriented approach and one that is linked to existing organisational structures and infrastructures. As such, the following five considerations are proffered as guiding principles for establishing an organisational AI Factory:

- Prioritise business use cases by value.

- Connect the AI Factory with what already exists.

- Deliver value incrementally and fail fast.

- Combine the power of AI and Generative AI.

- Nurture the talent within your organisation.
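To illustrate the first and third principles, the short Python sketch below ranks a backlog of use cases by a simple value score and applies a go/no-go check after a pilot. It is a sketch under stated assumptions, not the AI Factory model itself: the `UseCase` fields, the scoring formula, the 50% threshold, and the example figures are all hypothetical and would need to be agreed within each organisation.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    expected_value: float  # estimated benefit, in any consistent unit
    effort: float          # estimated delivery effort, must be greater than zero
    risk: float            # 0.0 (low risk) to 1.0 (high risk)


def priority_score(use_case: UseCase) -> float:
    """Assumed scoring rule: risk-adjusted value per unit of effort."""
    return use_case.expected_value * (1.0 - use_case.risk) / use_case.effort


def prioritise(use_cases: list[UseCase]) -> list[UseCase]:
    """Return the use cases ordered from highest to lowest score."""
    return sorted(use_cases, key=priority_score, reverse=True)


def go_no_go(measured_value: float, target_value: float, threshold: float = 0.5) -> str:
    """Assumed decision rule: continue only if the pilot has delivered
    at least `threshold` of the value it was expected to deliver."""
    return "go" if measured_value >= threshold * target_value else "no-go"


# Made-up backlog for illustration.
backlog = [
    UseCase("Chat assistant", expected_value=90, effort=60, risk=0.5),
    UseCase("Document summarisation", expected_value=120, effort=30, risk=0.2),
    UseCase("Demand forecasting", expected_value=200, effort=80, risk=0.3),
]

for use_case in prioritise(backlog):
    print(f"{use_case.name}: {priority_score(use_case):.2f}")

print(go_no_go(measured_value=40, target_value=100))
```

The exact formula matters less than having one: an explicit, shared scoring rule makes prioritisation decisions transparent, and an explicit threshold makes it easier to stop a pilot early rather than let it drift.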

Building a future-ready organisation

As some organisations race for the coveted crown of developing the first true Artificial General Intelligence (AGI), those around them must be ready to make use of what emerges. Without putting in the hard yards now to adopt AI technologies in their current state, the gap between the organisations that have adopted AI and those that have not is likely to widen at an exponential rate.

Therefore, as AI continues to revolutionise industries, organisations must proactively prepare to leverage its transformative potential. By focusing on the six pillars of Strategy, Governance & Ethics, Infrastructure, Data, Expertise, and Culture, organisations can build a solid foundation for AI adoption, providing the groundwork for an AI Factory that will incrementally deliver value in an ethical and safe way.

Empower your organisation to harness the power of AI, drive innovation, and achieve sustainable growth in an ever-evolving landscape.

Reach out to Gethin Leiba, Consulting Manager – Data & AI, or Becky Davis, Director of AI, to find out more about our AI Readiness Assessment and evaluate whether your organisation is ready for AI.